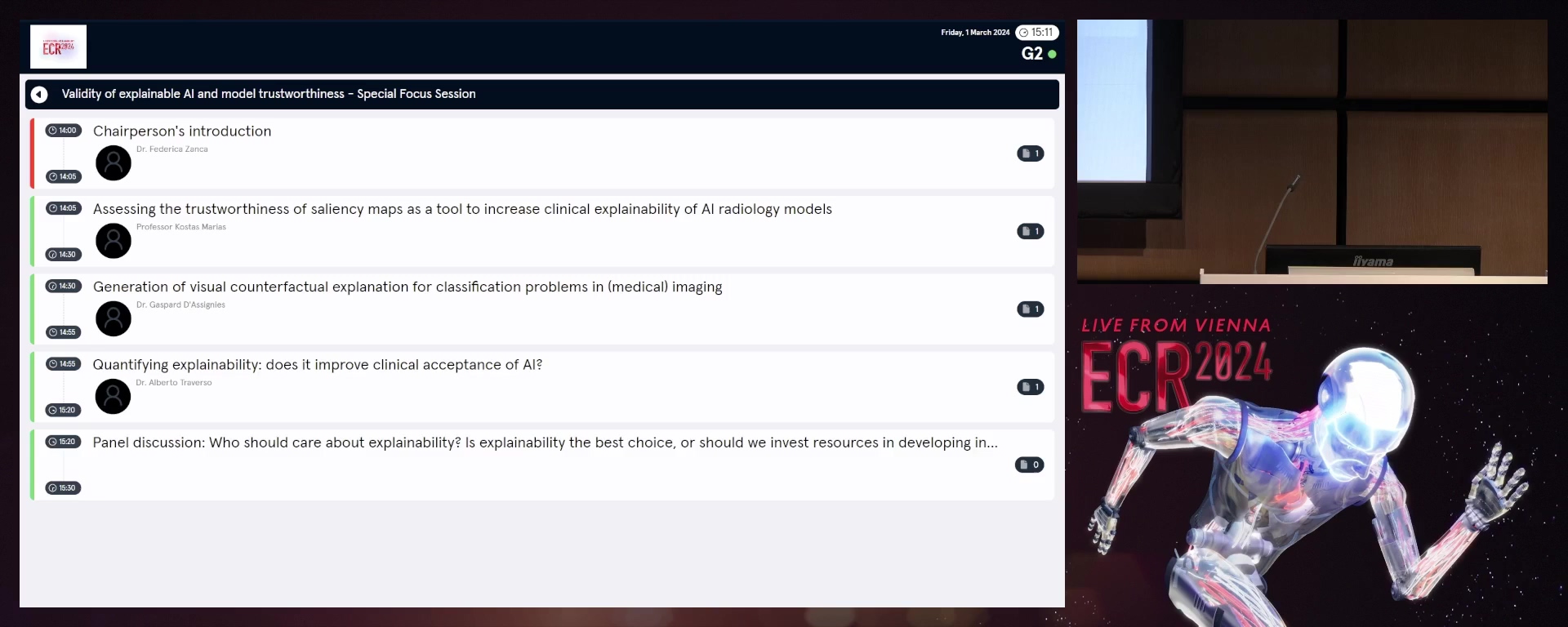

Special Focus Session

SF 15b - Validity of explainable AI and model trustworthiness

5 min

Chairperson's introduction

Federica Zanca, Leuven / Belgium

25 min

Assessing the trustworthiness of saliency maps as a tool to increase clinical explainability of AI radiology models

Kostas Marias, Heraklion, Crete / Greece

1. To present the most popular saliency map techniques.

2. To demonstrate current applications in radiology (e.g. highlighting regions of attention indicating pathology).

3. To describe how they can be used within the radiology workflow along with their current limitations.

25 min

Generation of visual counterfactual explanation for classification problems in (medical) imaging

Gaspard D'Assignies, Nantes / France

1. To understand this type of explanation and its link to the radiologist's practice.

2. To learn about methods with slightly different outputs that bring complementary information.

3. To be aware of the risks of each of these methods.

25 min

Quantifying explainability: does it improve clinical acceptance of AI?

Alberto Traverso, Milan / Italy

1. To identify the most common explainability algorithms.

2. To list the clinical applications of such algorithms.

3. To understand the impact of such algorithms on clinical decision-making.

10 min

Panel discussion: Who should care about explainability? Is explainability the best choice, or should we invest resources in developing inherently interpretable and transparent models?